No edit summary |

No edit summary |

||

| (8 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

= Taskotron Overview = | |||

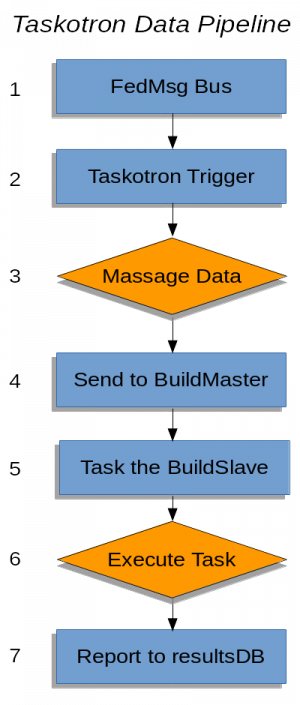

[https://taskotron.fedoraproject.org/ Taskotron] is the CI system Fedora currently uses for running automated tests. Currently it runs tests for all Fedora packages, docker base images, as well as Atomic and Cloud images. Taskotron is able to support a wide variety of tasks and reporting mechanisms. While it's fairly straight forward to [https://qa.fedoraproject.org/docs/libtaskotron/latest/writingtasks.html write tasks] for local execution, it's not entirely clear how your task fits into Taskotron in Production. This document aims to be an overview of how data gets from one component to another inside a production instance of Taskotron. Here's a high level flow chart: | |||

Taskotron | [[File:TaskotronPipelineOverview.png|300px|An overview of Taskotron dataflow]] | ||

The following sections define what happens at each numbered stage in the flow chart. | |||

=== 1) FedMsg Input === | === 1) FedMsg Input === | ||

| Line 19: | Line 19: | ||

CloudComposeJobTrigger. | CloudComposeJobTrigger. | ||

3) After an fedmsg is consumed, the "JobTrigger" parses the data from the FedMsg to prepare it | === 3) Massage the Data === | ||

After an fedmsg is consumed, the "JobTrigger" parses the data from the FedMsg to prepare it | |||

for use by the execution engine. In our CloudComposeJobTrigger example, it first checks to see if | for use by the execution engine. In our CloudComposeJobTrigger example, it first checks to see if | ||

the message is saying that the Pungi compose is "FINISHED." If it's "FINISHED," it'll continue | the message is saying that the Pungi compose is "FINISHED." If it's "FINISHED," it'll continue | ||

to process the data, otherwise it discards the message. That particular fedmsg only contains the | to process the data, otherwise it discards the message. That particular fedmsg only contains the | ||

following fields | following fields<ref>These are the only fields we care about for this example. These fields are inside the 'msg' block of the emitted fedmsg. You can see a full [https://apps.fedoraproject.org/datagrepper/id?id=2017-f853e69c-5e95-43b9-9471-101f508efb6d&is_raw=true&size=extra-large example here].</ref>: 'status,' 'location,' and 'compose_id.' | ||

When the JobTrigger is done, it returns a python dictionary with the following fields (this is not | When the JobTrigger is done, it returns a python dictionary with the following fields (this is not | ||

| Line 33: | Line 32: | ||

* message_type: the type of message, which is CloudCompose or AtomicCompose for our example | * message_type: the type of message, which is CloudCompose or AtomicCompose for our example | ||

* item: This is the data we want to hand to our task for execution. In our case, it's a URL that points to the qcow2 image the Pungi compose generated. | * item: This is the data we want to hand to our task for execution. In our case, it's a URL that points to the qcow2 image the Pungi compose generated. | ||

* item_type: What kind of item the task will run on, in our case a 'compose' | * item_type: What kind of item the task will run on, in our case a 'compose' <ref>There is a finite number of available types to choose from, and these are all located in the libtaskotron.check component, and determines what type of report (reporttype) is sent to resultsDB.</ref> | ||

* name: The human readable name of what's being passed in the item. In our example it'd be Fedora-Cloud or Fedora-Atomic. | * name: The human readable name of what's being passed in the item. In our example it'd be Fedora-Cloud or Fedora-Atomic. | ||

* version: What version of the thing are we using? In our example it'll be 24 (or whatever release of Fedora this fedmsg was for). Other JobTriggers operate on packages, and the version and release fields would reflect which package is being referenced in the fedmsg. | * version: What version of the thing are we using? In our example it'll be 24 (or whatever release of Fedora this fedmsg was for). Other JobTriggers operate on packages, and the version and release fields would reflect which package is being referenced in the fedmsg. | ||

| Line 41: | Line 40: | ||

file. For our example, it looks like this: | file. For our example, it looks like this: | ||

<pre>- when: | <pre> | ||

- when: | |||

message_type: AtomicCompose | message_type: AtomicCompose | ||

do: | do: | ||

- {tasks: [upstream-atomic, fedora-cloud-tests]}</pre> | - {tasks: [upstream-atomic, fedora-cloud-tests]} | ||

</pre> | |||

The 'message_type' inside the dict the JobTrigger generated maps directly to the trigger rule. The next question is where does the task itself live and how does trigger know what it is? Each | The 'message_type' inside the dict the JobTrigger generated maps directly to the trigger rule. The next question is where does the task itself live and how does trigger know what it is? Each | ||

task lives in it's own repository, which taskotron mirrors locally | task lives in it's own repository, which taskotron mirrors locally <ref>Taskotron mirrors task repos with GrokMirror. All existing tasks currently running can be found in the taskotron ansible repo: https://infrastructure.fedoraproject.org/cgit/ansible.git/tree/inventory/group_vars/taskotron-prod#n19</ref> to speed up execution times. For our example, upstream-atomic and fedora-cloud-tests live on pagure<ref>https://pagure.io/taskotron/task-upstream-atomic and https://pagure.io/taskotron/task-fedora-cloud-tests</ref>. | ||

Let's take a look at what exactly a "task" is and what they're made of. There are already docs for this | Let's take a look at what exactly a "task" is and what they're made of. There are already [http://libtaskotron.readthedocs.io/en/latest/writingtasks.html docs for this], but here's a brief overview. Each task contains at least 2 things: a task.yml file | ||

and some bit of code that is the task. The task.yml contains all the information required by the runner to execute the task. Our fedora-cloud-tests example looks like this: | and some bit of code that is the task. The task.yml contains all the information required by the runner to execute the task. Our fedora-cloud-tests example looks like this: | ||

<pre>--- | <pre> | ||

--- | |||

name: fedora-cloud-tests | name: fedora-cloud-tests | ||

desc: Run the Fedora Cloud or Atomic tests against a qcow2 image | desc: Run the Fedora Cloud or Atomic tests against a qcow2 image | ||

| Line 84: | Line 86: | ||

- name: report results to resultsdb | - name: report results to resultsdb | ||

resultsdb: | resultsdb: | ||

results: ${output}</pre> | results: ${output} | ||

</pre> | |||

The 'environment' section defines what the runner needs to have installed in order to run. Namely it needs to have the ansible, testcloud and git packages installed on the buildslave to run the | The 'environment' section defines what the runner needs to have installed in order to run. Namely it needs to have the ansible, testcloud and git packages installed on the buildslave to run the | ||

task. The 'actions' section defines what steps the task needs to take in order to run. You see a couple different items in there, namely the 'shell' and 'python' directives. The 'shell' calls | task. The 'actions' section defines what steps the task needs to take in order to run. You see a couple different items in there, namely the 'shell' and 'python' directives. The 'shell' calls | ||

act exactly like you'd expect, they run raw shell commands | act exactly like you'd expect, they run raw shell commands<ref>Astute readers will also see the use of '- ignorereturn' which causes the runner to continue if the invoked command fails for some reason.</ref>. The 'python' directive takes a couple options to run. | ||

First is 'file' which tells it which python file to execute (this is located in the same directory as task.yml). 'callable' is which method inside the 'file' to call. Those are the only two options | First is 'file' which tells it which python file to execute (this is located in the same directory as task.yml). 'callable' is which method inside the 'file' to call. Those are the only two options | ||

| Line 94: | Line 97: | ||

set as many of these as you like - they just have to match the keyword arguments required as input to the method referenced in 'callable'). | set as many of these as you like - they just have to match the keyword arguments required as input to the method referenced in 'callable'). | ||

'${compose}' is a passed in argument from the runner | '${compose}' is a passed in argument from the runner <ref>A local run would look like this:<pre>"runtask -t compose -i <url to qcow2 image> runtask.yml"</pre></ref>, and '${artifactsdir}' is an absolute path to where the runner stores task artifacts (like log files), which is configured in the libtaskotron | ||

installation. The 'item' in the data dictionary created by the JobTrigger ends up in the '${compose}' variable for our task when it's run by the execution engine. | installation. The 'item' in the data dictionary created by the JobTrigger ends up in the '${compose}' variable for our task when it's run by the execution engine. | ||

| Line 100: | Line 103: | ||

directive), which is then referenced for submission to resultsDB. Now that we've had an overview of what a task is, let's get back to the rest of the overview. | directive), which is then referenced for submission to resultsDB. Now that we've had an overview of what a task is, let's get back to the rest of the overview. | ||

4-5) These two things, the task to be run, and the data dict created by the JobTrigger, are bundled together and sent to the execution engine to be queued for execution. The execution engine gets | === 4-5) Queue the Task === | ||

These two things, the task to be run, and the data dict created by the JobTrigger, are bundled together and sent to the execution engine to be queued for execution. The execution engine gets | |||

these two bits of information, and then finds an available job runner to execute the task on. | these two bits of information, and then finds an available job runner to execute the task on. | ||

6) The job runner (buildslave) receives the data from the buildmaster and then executes the task. If we were to run this locally, it would look like this: | === 6) Run the Task === | ||

The job runner (buildslave) receives the data from the buildmaster and then executes the task. If we were to run this locally, it would look like this: | |||

runtask -t data['message_type'] -i data['item'] runtask.yml | runtask -t data['message_type'] -i data['item'] runtask.yml | ||

| Line 110: | Line 115: | ||

by the task in task.yml as '${compose}' which corresponds to the 'message_type' passed in via the '-t' flag. | by the task in task.yml as '${compose}' which corresponds to the 'message_type' passed in via the '-t' flag. | ||

7) The actual reporting of the results to resultsDB is handled in the task itself, but the job runner (buildslave) will report that it's completed running the task to the buildmaster so it knows | === 7) Report to resultsDB === | ||

The actual reporting of the results to resultsDB is handled in the task itself, but the job runner (buildslave) will report that it's completed running the task to the buildmaster so it knows | |||

that the buildslave is available for another task. | that the buildslave is available for another task. | ||

== Conclusion == | |||

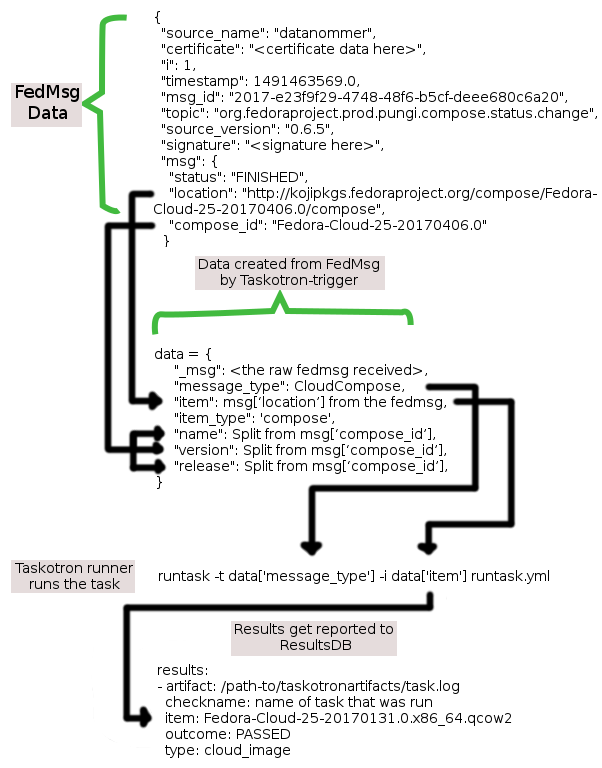

That's the basic overview for how data flows through Taskotron. As one final note, I've also added a graphic showing actual data, and what it looks like at each stage. Drop by the #fedora-qa channel on Freenode if you have any questions! Happy hacking! | |||

[ | [[File:DataTransformationTaskotron.png| Data transformation through Taskotron]] | ||

<references/> | |||

Latest revision as of 14:36, 7 April 2017

Taskotron Overview

Taskotron (https://taskotron.fedoraproject.org/) is the CI system Fedora currently uses for running automated tests. It runs tests for all Fedora packages and Docker base images, as well as Atomic and Cloud images. Taskotron supports a wide variety of tasks and reporting mechanisms. While it's fairly straightforward to write tasks for local execution (see https://qa.fedoraproject.org/docs/libtaskotron/latest/writingtasks.html), it's not entirely clear how your task fits into Taskotron in production. This document is an overview of how data gets from one component to another inside a production instance of Taskotron. Here's a high-level flow chart:

![An overview of Taskotron dataflow](image-1.png)

The following sections define what happens at each numbered stage in the flow chart.

1) FedMsg Input

Pretty much every aspect of Fedora infrastructure emits status messages to the FedMsg bus. We use this bus to coordinate and kick off a variety of tasks, from desktop notifications to kicking off automated testing. It's a constant stream of event data. Taskotron listens to the FedMsg bus with the "taskotron-trigger" package.

2) Taskotron-trigger

Trigger sets up a local fedmsg-hub with several custom "consumers." These consumers look for specific FedMsg topics. For instance, the cloud_compose_complete_msg.py jobtrigger listens for 'org.fedoraproject.prod.pungi.compose.status.change' messages, and then kicks off the CloudComposeJobTrigger.
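As a rough illustration of the topic matching described above, here is a minimal sketch in plain Python. It does not use the real fedmsg-hub consumer API; the lookup table and the `select_trigger` helper are hypothetical names made up for this example. Only the topic string and the trigger name come from the text.

```python
# Hypothetical sketch: route a fedmsg topic to the JobTrigger that handles it.
# This is NOT the taskotron-trigger implementation, just the idea behind it.
COMPOSE_TOPIC = "org.fedoraproject.prod.pungi.compose.status.change"

# Illustrative lookup table: topic -> name of the JobTrigger to kick off.
TOPIC_TO_TRIGGER = {
    COMPOSE_TOPIC: "CloudComposeJobTrigger",
}


def select_trigger(topic):
    """Return the trigger name for a topic, or None if no consumer wants it."""
    return TOPIC_TO_TRIGGER.get(topic)


if __name__ == "__main__":
    print(select_trigger(COMPOSE_TOPIC))
```

Messages on any other topic simply fall through, which mirrors how the bus is a constant stream that each consumer filters for itself.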

3) Massage the Data

After a fedmsg is consumed, the "JobTrigger" parses the data from the FedMsg to prepare it for use by the execution engine. In our CloudComposeJobTrigger example, it first checks whether the message says the Pungi compose is "FINISHED." If it is, the JobTrigger continues to process the data; otherwise it discards the message. For this example, we only care about the following fields of that fedmsg[1]: 'status', 'location', and 'compose_id'.

When the JobTrigger is done, it returns a Python dictionary with the following fields (this is not an exhaustive list - these can be anything):

- _msg: The raw fedmsg we parsed

- message_type: the type of message, which is CloudCompose or AtomicCompose for our example

- item: This is the data we want to hand to our task for execution. In our case, it's a URL that points to the qcow2 image the Pungi compose generated.

- item_type: What kind of item the task will run on, in our case a 'compose' [2]

- name: The human readable name of what's being passed in the item. In our example it'd be Fedora-Cloud or Fedora-Atomic.

- version: What version of the thing are we using? In our example it'll be 24 (or whatever release of Fedora this fedmsg was for). Other JobTriggers operate on packages, and the version and release fields would reflect which package is being referenced in the fedmsg.

- release: This is very similar to version, and for our example it would be the date the compose was executed on. For example, 20170310.0.
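The filtering and the resulting dictionary can be sketched as follows. This is a hedged illustration, not the real CloudComposeJobTrigger: the function name, the way 'name', 'version' and 'release' are split out of 'compose_id', the rule for choosing 'message_type', and the use of 'location' directly as the 'item' are all assumptions made for the example.

```python
def process_compose_message(message):
    """Hypothetical JobTrigger step: turn a compose fedmsg into a data dict.

    Returns None when the compose is not FINISHED, i.e. the message is discarded.
    """
    msg = message["msg"]  # the fields we care about live in the 'msg' block
    if msg.get("status") != "FINISHED":
        return None

    # Assumption: compose_id looks like '<name>-<version>-<release>'.
    name, version, release = msg["compose_id"].rsplit("-", 2)

    return {
        "_msg": message,  # the raw fedmsg we parsed
        # Assumption: the message type is derived from the compose name.
        "message_type": "AtomicCompose" if "Atomic" in name else "CloudCompose",
        # Assumption: the real trigger builds a qcow2 URL; here we reuse 'location'.
        "item": msg["location"],
        "item_type": "compose",
        "name": name,
        "version": version,
        "release": release,
    }


if __name__ == "__main__":
    example = {
        "msg": {
            "status": "FINISHED",
            "location": "https://example.org/compose/",  # made-up URL
            "compose_id": "Fedora-Atomic-24-20170310.0",  # made-up ID
        }
    }
    print(process_compose_message(example))
```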

Once this data is ready, it's paired with the task defined in the 'trigger_rules.yml' config file. For our example, it looks like this:

    - when:
        message_type: AtomicCompose
      do:
        - {tasks: [upstream-atomic, fedora-cloud-tests]}

The 'message_type' inside the dict the JobTrigger generated maps directly to the trigger rule. The next question is where the task itself lives and how trigger knows what it is. Each task lives in its own repository, which Taskotron mirrors locally[3] to speed up execution times. For our example, upstream-atomic and fedora-cloud-tests live on pagure[4].
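To make the mapping from 'message_type' to tasks concrete, here is a small sketch that matches a data dict against rules shaped like the trigger_rules.yml example. The rule is written as a Python literal rather than loaded from YAML, and `tasks_for` is a hypothetical helper, not part of taskotron-trigger.

```python
# The trigger rule from the example above, written as Python data.
TRIGGER_RULES = [
    {
        "when": {"message_type": "AtomicCompose"},
        "do": [{"tasks": ["upstream-atomic", "fedora-cloud-tests"]}],
    },
]


def tasks_for(data, rules=TRIGGER_RULES):
    """Collect the tasks of every rule whose 'when' fields all match the data dict."""
    tasks = []
    for rule in rules:
        if all(data.get(key) == value for key, value in rule["when"].items()):
            for action in rule["do"]:
                tasks.extend(action.get("tasks", []))
    return tasks


if __name__ == "__main__":
    print(tasks_for({"message_type": "AtomicCompose"}))
```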

Let's take a look at what exactly a "task" is and what it's made of. There are already docs for this (http://libtaskotron.readthedocs.io/en/latest/writingtasks.html), but here's a brief overview. Each task contains at least two things: a task.yml file and some bit of code that is the task. The task.yml contains all the information required by the runner to execute the task. Our fedora-cloud-tests example looks like this:

    ---
    name: fedora-cloud-tests
    desc: Run the Fedora Cloud or Atomic tests against a qcow2 image
    maintainer: roshi

    environment:
        rpm:
            - ansible
            - testcloud
            - git

    actions:
        - shell:
            # Clone the upstream test repo
            - git clone https://pagure.io/fedora-qa/cloud-atomic-ansible-tests.git

        - python:
              file: run_cloud_tests.py
              callable: run
              test_image: ${compose}
              artifactsdir: ${artifactsdir}
          export: output

        - shell:
            - ignorereturn:
                - rm inventory

        - shell:
            - rm -rf cloud-atomic-ansible-tests

        - name: report results to resultsdb
          resultsdb:
              results: ${output}

The 'environment' section defines what the runner needs to have installed in order to run. Namely, the ansible, testcloud and git packages must be installed on the buildslave to run the task. The 'actions' section defines what steps the task needs to take in order to run. You'll see a couple of different items in there, namely the 'shell' and 'python' directives. The 'shell' calls act exactly like you'd expect: they run raw shell commands[5]. The 'python' directive takes a couple of options.

First is 'file', which tells it which Python file to execute (this is located in the same directory as task.yml). 'callable' names the method inside 'file' to call. Those are the only two options required when it comes to running Python files inside a task. The last two options (test_image and artifactsdir) are keyword arguments that get passed into the method 'callable' points to (you can set as many of these as you like - they just have to match the keyword arguments required as input to the method referenced in 'callable').
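For illustration, the method that 'callable: run' points to only needs to accept the keyword arguments named in task.yml. The sketch below is a hypothetical stand-in, not the contents of the real run_cloud_tests.py: the log file name and the returned string are invented placeholders.

```python
import os


def run(test_image, artifactsdir):
    """Hypothetical stand-in for the 'run' callable referenced in task.yml.

    The parameter names match the 'test_image' and 'artifactsdir' options
    of the python directive.
    """
    # Assumption: the task writes a log into the artifacts directory.
    log_path = os.path.join(artifactsdir, "cloud_tests.log")
    with open(log_path, "w") as log:
        log.write("would test image: %s\n" % test_image)

    # Whatever is returned is what 'export: output' captures.
    # A placeholder string is used here; the real task returns test results.
    return "tested %s, log written to %s" % (test_image, log_path)
```

Adding a third option under the python directive would simply mean adding a third keyword argument to this signature.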

'${compose}' is an argument passed in by the runner[6], and '${artifactsdir}' is the absolute path where the runner stores task artifacts (like log files), which is configured in the libtaskotron installation. The 'item' in the data dictionary created by the JobTrigger ends up in the '${compose}' variable for our task when it's run by the execution engine.
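The substitution of '${compose}' and '${artifactsdir}' can be pictured with Python's standard `string.Template`, which happens to use the same `${name}` syntax. This is only an analogy under that assumption; how libtaskotron actually performs the substitution is not covered here, and `render_args`, the URL and the path are made up for the example.

```python
from string import Template


def render_args(raw_args, variables):
    """Replace ${name} placeholders in each option value with runner variables."""
    return {
        key: Template(value).substitute(variables)
        for key, value in raw_args.items()
    }


if __name__ == "__main__":
    # Options as they appear under the python directive in task.yml.
    raw = {"test_image": "${compose}", "artifactsdir": "${artifactsdir}"}
    # Made-up values: 'compose' holds the item from the JobTrigger's data dict.
    variables = {
        "compose": "https://example.org/Fedora-Atomic-24.qcow2",
        "artifactsdir": "/tmp/artifacts",
    }
    print(render_args(raw, variables))
```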

The last section of the task.yml file is how we get the results of the task into resultsDB. The output of the python call gets saved to 'output' (via the 'export: output' line of the python directive), which is then referenced for submission to resultsDB. Now that we've had an overview of what a task is, let's get back to the rest of the overview.

4-5) Queue the Task

These two things, the task to be run, and the data dict created by the JobTrigger, are bundled together and sent to the execution engine to be queued for execution. The execution engine gets these two bits of information, and then finds an available job runner to execute the task on.

6) Run the Task

The job runner (buildslave) receives the data from the buildmaster and then executes the task. If we were to run this locally, it would look like this:

    runtask -t data['item_type'] -i data['item'] runtask.yml

The '-t' flag is the type of item we want to run on (in this case, a 'compose'), and the '-i' flag is the item we're going to run against. The value passed in via the '-i' flag is referenced by the task in task.yml as '${compose}', a variable named after the 'item_type' passed in via the '-t' flag.
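Put together, the local invocation can be assembled from the JobTrigger's data dict as in the sketch below. It uses 'item_type' for '-t', consistent with '-t' being the type of item ('compose'). `runtask_command` is a hypothetical helper; only the 'runtask' command, its '-t' and '-i' flags and the 'runtask.yml' argument come from the text. The function builds the argument list and does not execute anything.

```python
def runtask_command(data, formula="runtask.yml"):
    """Build the argument list for a local runtask call from a data dict."""
    return ["runtask", "-t", data["item_type"], "-i", data["item"], formula]


if __name__ == "__main__":
    data = {
        "item_type": "compose",
        "item": "https://example.org/Fedora-Atomic-24.qcow2",  # made-up URL
    }
    print(" ".join(runtask_command(data)))
```

Returning a list rather than a single string means the result could be handed to `subprocess.run` without any shell quoting concerns.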

7) Report to resultsDB

The actual reporting of the results to resultsDB is handled in the task itself, but the job runner (buildslave) will report that it's completed running the task to the buildmaster so it knows that the buildslave is available for another task.
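The queueing from steps 4-5 and the completion report described here can be pictured together with a toy model. This is a deliberately simplified sketch, not how the buildmaster is implemented; the `ExecutionQueue` class and the runner names are invented for illustration.

```python
from collections import deque


class ExecutionQueue:
    """Toy model: jobs wait until a runner is idle, and runners report back."""

    def __init__(self, runners):
        self.idle = deque(runners)   # runners available for work
        self.pending = deque()       # (task, data) jobs waiting for a runner
        self.running = {}            # runner -> job currently executing

    def submit(self, task, data):
        """Queue a task with its data dict, then try to start work."""
        self.pending.append((task, data))
        return self.dispatch()

    def dispatch(self):
        """Hand pending jobs to idle runners; return the (runner, job) pairs started."""
        started = []
        while self.pending and self.idle:
            runner = self.idle.popleft()
            job = self.pending.popleft()
            self.running[runner] = job
            started.append((runner, job))
        return started

    def finished(self, runner):
        """A runner reports completion and becomes available for another task."""
        self.running.pop(runner)
        self.idle.append(runner)
        return self.dispatch()
```

With a single runner, a second submitted job stays pending until `finished` is called for the first one, which is why the completion report matters even though results go to resultsDB separately.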

Conclusion

That's the basic overview of how data flows through Taskotron. As one final note, I've also added a graphic showing actual data, and what it looks like at each stage. Drop by the #fedora-qa channel on Freenode if you have any questions! Happy hacking!

![Data transformation through Taskotron](image-2.png)

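- ↑ These are the only fields we care about for this example. These fields are inside the 'msg' block of the emitted fedmsg. You can see a full example here: https://apps.fedoraproject.org/datagrepper/id?id=2017-f853e69c-5e95-43b9-9471-101f508efb6d&is_raw=true&size=extra-large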

- ↑ There is a finite number of available types to choose from, all located in the libtaskotron.check component. The type determines what kind of report (reporttype) is sent to resultsDB.

- ↑ Taskotron mirrors task repos with GrokMirror. All existing tasks currently running can be found in the taskotron ansible repo: https://infrastructure.fedoraproject.org/cgit/ansible.git/tree/inventory/group_vars/taskotron-prod#n19

- ↑ https://pagure.io/taskotron/task-upstream-atomic and https://pagure.io/taskotron/task-fedora-cloud-tests

- ↑ Astute readers will also see the use of '- ignorereturn' which causes the runner to continue if the invoked command fails for some reason.

- ↑ A local run would look like this:

      runtask -t compose -i <url to qcow2 image> runtask.yml