No edit summary |

(Update infos) |

||

| (10 intermediate revisions by 2 users not shown) | |||

| Line 10: | Line 10: | ||

=== | === Technologies we'll use === | ||

* Virtualization : KVM | * Virtualization : KVM | ||

* Platform management : Ovirt (http://ovirt.org) | * Platform management : Ovirt (http://ovirt.org) | ||

* Storage : ISCSI share | * Storage : ISCSI share | ||

* Disk management : LVM | * Disk management : LVM | ||

* OS provisioning : Cobbler | |||

=== Cloud instances === | |||

=== Cloud | |||

Currently, the cloud instance is running under a ovirt-appliance provided by the ovirt team.<BR> | Currently, the cloud instance is running under a ovirt-appliance provided by the ovirt team.<BR> | ||

We need to know how we will deploy the cloud instance in fedora infrastructure.<BR> | We need to know how we will deploy the cloud instance in fedora infrastructure.<BR> | ||

* Use configured ovirt-appliance (just like the test instance) | * Use configured ovirt-appliance (just like the test instance) | ||

* Deploy and manage all apps that ovirt uses (cobbler, collectd, db, etc) | ** All oVIRT services all-in-one box. | ||

<BR> | |||

* Deploy and manage all apps that ovirt uses (cobbler, collectd, db, etc) | |||

** 1 box for oVIRT UI (physical or virtual). Load balanced. | |||

** 1 box for Cobbler (physical or virtual). | |||

** 1 box for PXE boot (virtual would be good enough). We can think to mix it with Cobbler box. | |||

** 1 box for Database (dedicated to prevent overload issue). | |||

** x box(es) for oVIRT node(s) (dedicated as described below). | |||

<BR> | |||

i'm for the second choice. | |||

=== Hardware === | |||

The nodes will be diskless 16G RAM, 2X Quad Core 1U x3550's. The storage nodes will be 2U boxes of a similar type. | |||

=== Network === | |||

For the initial rollout there will be two networks. | |||

First network will be connected via an external IP pool space of about 80 IP's (No eta on this yet). | |||

The second network will be a combined storage and management network. It'll be in private IP space (10.something). This is where the storage network will be as well as overall management. We only have one switch for the initial rollout, vlan will take care of the rest. | |||

For future rollouts we may add an additional switch, use multipath, etc. | |||

=== Temporary repo === | |||

Redhat<BR> | |||

* [http://download.tuxfamily.org/lxtnow/redhat/5/i386 rhel_i386] | |||

* rhel_x86_64 (not yet)<BR> | |||

<BR> | |||

Fedora<BR> | |||

* fc10 (not yet) :: Ovirt repo can be use at this time. | |||

* fc11 (not yet) :: Ovirt repo should do the trick as well (tested). | |||

== oVIRT == | == oVIRT == | ||

| Line 49: | Line 81: | ||

==== Authentication (web access) ==== | ==== Authentication (web access) ==== | ||

The default authentication for ovirt is handles by krb5 | The default authentication for ovirt is handles by krb5 through LDAP database (it's also includes a IPA instance). It's not the way we want to follow.<BR> | ||

Fedora people should be able to log in through their FAS account.<BR> | Fedora people should be able to log in through their FAS account (or trusted CA ?).<BR> | ||

Now i'm wondering if we'll follow that way which will imply to hack a bit ovirt (e.g don't let fedora people see Redhat pool through the web interface) or just handle a trac instance where they could request a virtual machine or again, by apply to a new-cloud-specific group with additional tools which will handle | Now i'm wondering if we'll follow that way which will imply to hack a bit ovirt (e.g don't let fedora people see Redhat pool through the web interface) or just handle a trac instance where they could request a virtual machine or again, by apply to a new-cloud-specific group with additional tools which will handle vms. | ||

* Current ETA: | * Current ETA: | ||

I currently setup the web interface to deals with Apache Authentication.<BR> | I currently setup the web interface to deals with Apache Authentication.<BR> | ||

The password | The password file is stored in /srv<BR> | ||

==== User and Permissions management ==== | ==== User and Permissions management ==== | ||

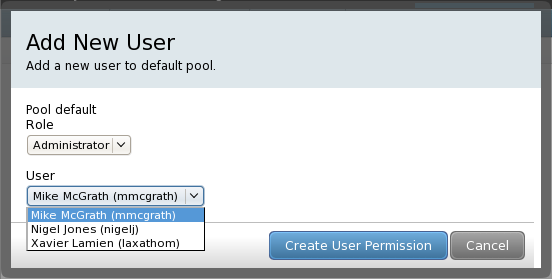

Ovirt fetch only registered LDAP users in the pop-up window.<BR> | |||

As we use FAS2, we'll make ovirt to deal with FAS account to have something like this | |||

through the webUI.<BR> | |||

<BR> | |||

Here is a capture of the result after hacked the code a bit :<BR> | |||

[[Image:ovirt-users_permissions_with_fas2.png]] | |||

<BR> | |||

<BR> | |||

Now, users in that list can only be approved or administrator members from the sysadmin-cloud | |||

group (which has been created for the cloud instance).<BR> | |||

As said above, the web access could be forbidden for fedora standard people.<BR> | |||

<BR>[to edit] | |||

==== Hosts management ==== | ==== Hosts management (part of ovirt nodes) ==== | ||

Ovirt is able to manage different hosts from different places.<BR> | Ovirt is able to manage different hosts from different places but can't be shared between hardware pools.<BR> | ||

Hosts informations are indexes and stored in its database (table Host).<BR> | Hosts informations are indexes and stored in its database (table Host).<BR> | ||

From all i know, you cannot register any hosts from the web interface | From all i know, you cannot register any hosts from the web interface.<BR> | ||

You only able to add hosts from available registered hosts | You only able to add hosts from available registered hosts to [hardware|virtual|smart] pools or anythings else you would like to dream about. | ||

* How to register hosts | * How to register hosts | ||

You | |||

'''Packages requirement:'''<BR> | |||

ovirt-node | |||

ovirt-node-selinux | |||

ovirt-node-statefull | |||

libvirt-qpid ''## libvirt connector for Qpid and QMF which are used by ovirt-server | |||

collectd-virt ''## libvirt plugin for collectd'' | |||

'''Auth requirement:'''<BR> | |||

You need to register your hosts by generating the authentication file via ovirt-add-host shell-script. | |||

Then load this file to authenticate your host.<BR> | |||

This part should be move out or bind to generated CA.<BR> | |||

''' Puppet files:'''<BR> | |||

/etc/collecd.conf ''## from where you will add ovirt-server-side and libvirtd infos'' | |||

/etc/libvirt/qemu.conf | |||

/etc/sasl2/libvirt.conf | |||

/etc/sysconfig/libvirt-qpid ''## from where you'll add ovirt-server side information (host + port num)'' | |||

<BR> | |||

''' iptables issues:'''<BR> | |||

Default Port num which will need to be open<BR> | |||

7777:tcp ''## for ovirt-listen-awake daemon (tells to ovirt-server that the node is available)'' | |||

16509:tcp ''## for libvirtd daemon'' | |||

5900-6000:tcp ''## vnc connection from client-side | |||

49152-49216:tcp ''## for libvirt migration'' | |||

Host-side registration (/var/log/ovirt.log)<BR> | |||

Starting wakeup conversation. | |||

Retrieving keytab: 'http://management.priv.ovirt.org/ipa/config/192.168.50.3-libvirt.tab' | |||

Disconnecting. | |||

Sending oVirt Node details to server. | |||

Finished! | |||

==== Storage management ==== | ==== Storage management ==== | ||

For its initial rollout storage will be done on two servers each with 4T of usable space. One will be master and export its data via iscsi to the nodes. The other will be a secondary storage (replicated to via iscsi and software raid). This is not an HA setup but is a data redundancy setup so if one fails, we won't lose any data. | |||

==== Pools management ==== | ==== Pools management ==== | ||

We will have different pools to dissociate the usage.<BR> | |||

So, a pool to handle all redhat VM, one for fedora people, another for specific usage and so on.<BR> | |||

==== Smart Pools ==== | ==== Smart Pools ==== | ||

It's just bookmark-like to manage things easier. | |||

Any oVIRT can create one and have a shortcut menu at the bottom left of the page. | |||

== Cobbler == | == Cobbler == | ||

Cobbler is the way where ovirt handles OS provisioning and profile management. | Cobbler is the way where ovirt handles OS provisioning and profile management. | ||

We'll need to prevent from people question such as :<BR> | We'll need to prevent from people question such as :<BR> | ||

Could we request a specific profile for our vm or it | Could we request a specific profile for our vm or is it just up to us ?<BR> | ||

==== Authentication ==== | ==== Authentication ==== | ||

Latest revision as of 14:14, 15 March 2009

<DRAFT>

Fedora Cloud

This page has been set up to track what is happening on our Fedora cloud test instance, which is running on the xen6 box for now. It will also serve as a start page for what we need to do and what work remains to be done.

Overview

Technologies we'll use

- Virtualization : KVM

- Platform management : oVirt (http://ovirt.org)

- Storage : iSCSI share

- Disk management : LVM

- OS provisioning : Cobbler

Cloud instances

Currently, the cloud instance is running on an ovirt-appliance provided by the oVirt team.

We need to decide how we will deploy the cloud instance in the Fedora infrastructure.

- Use a configured ovirt-appliance (just like the test instance)

  - All oVirt services in one all-in-one box.

- Deploy and manage all the apps that oVirt uses (cobbler, collectd, db, etc.)

  - 1 box for the oVirt UI (physical or virtual). Load balanced.

  - 1 box for Cobbler (physical or virtual).

  - 1 box for PXE boot (virtual would be good enough). We could consider combining it with the Cobbler box.

  - 1 box for the database (dedicated to prevent overload issues).

  - x box(es) for oVirt node(s) (dedicated as described below).

I am in favor of the second choice.

Hardware

The nodes will be diskless 1U x3550s with 16G of RAM and 2x quad-core CPUs. The storage nodes will be 2U boxes of a similar type.

Network

For the initial rollout there will be two networks.

The first network will be connected via an external IP pool of about 80 IPs (no ETA on this yet).

The second network will be a combined storage and management network in private IP space (10.something). We only have one switch for the initial rollout; VLANs will take care of the rest.

For future rollouts we may add an additional switch, use multipath, etc.

Temporary repo

Red Hat

- rhel_i386 (http://download.tuxfamily.org/lxtnow/redhat/5/i386)

- rhel_x86_64 (not yet)

Fedora

- fc10 (not yet) :: the oVirt repo can be used for now.

- fc11 (not yet) :: the oVirt repo should do the trick as well (tested).

oVIRT

Puppet Files

Below are all the files that need to be managed by Puppet.

# In /puppet/config/cloud
/etc/ovirt-server/database.yml
/etc/ovirt-server/db
/etc/ovirt-server/development.rb
/etc/ovirt-server/production.rb
/etc/ovirt-server/test.rb
/etc/sysconfig/ovirt-mongrel-rails
/etc/sysconfig/ovirt-rails

# In /puppet/config/web
/etc/httpd/conf.d/ovirt-server.conf
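As a rough sketch, the file list above could be kept in one place and checked against a host before the Puppet module is written. The dictionary layout and the `missing_files` helper are assumptions made for illustration; only the paths come from this page.

```python
import os

# Paths are the ones listed on this page, grouped by Puppet config directory.
# The grouping structure itself is a hypothetical convenience.
MANAGED_FILES = {
    "/puppet/config/cloud": [
        "/etc/ovirt-server/database.yml",
        "/etc/ovirt-server/db",
        "/etc/ovirt-server/development.rb",
        "/etc/ovirt-server/production.rb",
        "/etc/ovirt-server/test.rb",
        "/etc/sysconfig/ovirt-mongrel-rails",
        "/etc/sysconfig/ovirt-rails",
    ],
    "/puppet/config/web": [
        "/etc/httpd/conf.d/ovirt-server.conf",
    ],
}


def missing_files(managed=MANAGED_FILES, exists=os.path.exists):
    """Return, per Puppet config directory, the managed paths absent on this host."""
    return {
        config_dir: [path for path in paths if not exists(path)]
        for config_dir, paths in managed.items()
    }


if __name__ == "__main__":
    for config_dir, paths in missing_files().items():
        for path in paths:
            print(f"{config_dir}: missing {path}")
```

The `exists` parameter is only there so the check can be exercised without touching a real oVirt server.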

[more]

Authentication (web access)

By default, oVirt authentication is handled by krb5 backed by an LDAP database (it also includes an IPA instance). That is not the approach we want to follow.

Fedora people should be able to log in through their FAS account (or a trusted CA?).

I am now wondering whether we will go that way, which implies hacking oVirt a bit (e.g. not letting Fedora people see the Red Hat pool through the web interface), or just run a Trac instance where they can request a virtual machine, or else have them apply to a new cloud-specific group, with additional tools to handle the VMs.

- Current status:

I have currently set up the web interface to use Apache authentication.

The password file is stored in /srv.

User and Permissions management

oVirt only fetches registered LDAP users in the pop-up window.

As we use FAS2, we will make oVirt work with FAS accounts through the web UI.

Here is a capture of the result after hacking the code a bit:

[Image: ovirt-users_permissions_with_fas2.png]

Users in that list can now only be approved members or administrators of the sysadmin-cloud group (which was created for the cloud instance).

As said above, web access could be forbidden for standard Fedora users.
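The membership rule can be sketched as a simple filter. This is only an illustration: the shape of the user data (a mapping from username to group memberships) and the names `selectable_users` and `ALLOWED_MEMBERSHIPS` are hypothetical and do not reflect the actual FAS2 API or the oVirt patch.

```python
CLOUD_GROUP = "sysadmin-cloud"

# Assumed membership values; the real FAS2 field names may differ.
ALLOWED_MEMBERSHIPS = {"approved", "administrator"}


def selectable_users(users):
    """Return the usernames that may appear in the permissions pop-up.

    `users` maps a username to a dict of {group name: membership value}.
    Only approved members or administrators of the cloud group qualify.
    """
    return sorted(
        name
        for name, groups in users.items()
        if groups.get(CLOUD_GROUP) in ALLOWED_MEMBERSHIPS
    )


if __name__ == "__main__":
    # Made-up sample accounts, for illustration only.
    sample = {
        "alice": {"sysadmin-cloud": "administrator"},
        "bob": {"sysadmin-cloud": "unapproved"},
        "carol": {"sysadmin-main": "approved"},
        "dave": {"sysadmin-cloud": "approved"},
    }
    print(selectable_users(sample))
```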

[to edit]

Hosts management (part of ovirt nodes)

oVirt can manage hosts from different places, but a host cannot be shared between hardware pools.

Host information is indexed and stored in its database (table Host).

As far as I know, you cannot register hosts from the web interface.

You can only add already registered hosts to [hardware|virtual|smart] pools, or anything else you can dream up.

- How to register hosts

Package requirements:

ovirt-node
ovirt-node-selinux
ovirt-node-statefull
libvirt-qpid ## libvirt connector for Qpid and QMF, which are used by ovirt-server
collectd-virt ## libvirt plugin for collectd

Auth requirement:

You need to register your hosts by generating the authentication file with the ovirt-add-host shell script.

Then load this file to authenticate your host.

This part should be moved out or bound to a generated CA.

Puppet files:

/etc/collectd.conf ## where you add the ovirt-server side and libvirtd information
/etc/libvirt/qemu.conf
/etc/sasl2/libvirt.conf
/etc/sysconfig/libvirt-qpid ## where you add the ovirt-server side information (host + port number)

iptables issues:

Default ports that need to be open:

7777:tcp ## for the ovirt-listen-awake daemon (tells ovirt-server that the node is available)
16509:tcp ## for the libvirtd daemon
5900-6000:tcp ## VNC connections from the client side
49152-49216:tcp ## for libvirt migration
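A minimal sketch of how the port list above could be turned into iptables commands. The ports come from this page; the `INPUT` chain, the append (`-A`) placement and the helper name `iptables_rules` are assumptions, and a real node would more likely get these rules from a Puppet-managed file.

```python
# (port or range, purpose) pairs taken from the list on this page.
# iptables writes port ranges as "first:last".
NODE_PORTS = [
    ("7777", "ovirt-listen-awake"),
    ("16509", "libvirtd"),
    ("5900:6000", "VNC connections from clients"),
    ("49152:49216", "libvirt migration"),
]


def iptables_rules(ports=NODE_PORTS, chain="INPUT"):
    """Return one iptables command line per port or port range."""
    return [
        f"iptables -A {chain} -p tcp --dport {port} -j ACCEPT  # {purpose}"
        for port, purpose in ports
    ]


if __name__ == "__main__":
    print("\n".join(iptables_rules()))
```

The commands are only printed, not executed, so they can be reviewed before being applied to a node.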

Host-side registration (/var/log/ovirt.log)

Starting wakeup conversation.
Retrieving keytab: 'http://management.priv.ovirt.org/ipa/config/192.168.50.3-libvirt.tab'
Disconnecting.
Sending oVirt Node details to server.
Finished!
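To check from a script that a node went through the whole registration, the log excerpt above can be matched step by step. This is a sketch that assumes the log always contains these messages in this order; the function name `registration_completed` is hypothetical.

```python
# Messages copied from the log excerpt on this page, in the order they appear.
EXPECTED_STEPS = [
    "Starting wakeup conversation.",
    "Retrieving keytab:",
    "Disconnecting.",
    "Sending oVirt Node details to server.",
    "Finished!",
]

SAMPLE_LOG = """Starting wakeup conversation.
Retrieving keytab: 'http://management.priv.ovirt.org/ipa/config/192.168.50.3-libvirt.tab'
Disconnecting.
Sending oVirt Node details to server.
Finished!
"""


def registration_completed(log_text):
    """Return True if every expected step appears in the log, in order."""
    position = 0
    for step in EXPECTED_STEPS:
        found = log_text.find(step, position)
        if found == -1:
            return False
        position = found + len(step)
    return True


if __name__ == "__main__":
    print(registration_completed(SAMPLE_LOG))
```

On a node, the text would be read from /var/log/ovirt.log instead of the sample string.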

Storage management

For the initial rollout, storage will be provided by two servers, each with 4T of usable space. One will be the master and export its data via iSCSI to the nodes. The other will be secondary storage (replicated to via iSCSI and software RAID). This is not an HA setup but a data redundancy setup, so if one server fails, we won't lose any data.

Pools management

We will have different pools to separate usage.

So, one pool for all Red Hat VMs, one for Fedora people, another for specific uses, and so on.

Smart Pools

Smart pools are just bookmark-like shortcuts that make things easier to manage. Any oVirt user can create one and get a shortcut menu at the bottom left of the page.

Cobbler

Cobbler is how oVirt handles OS provisioning and profile management.

We will need to anticipate questions from people such as:

Could we request a specific profile for our VM, or is it just up to us?

Authentication

We will also need to bind it to FAS.

</DRAFT>