m (→Smart-Proxy) |

m (→SELinux) |

||

| (98 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

This Wiki provides the Open Source and Red Hat communities with a guide to deploy OpenStack infrastructures using Puppet/Foreman system management solution. | |||

We are describing how to deploy and provision the management system itself and how to use it to deploy OpenStack Controller and OpenStack Compute nodes. | |||

{{admon/note|Note| This information has been gathered from real OpenStack lab tests using the latest data available at the time of writing.}} | |||

= Introduction = | |||

== Assumptions == | |||

* Upstream OpenStack based on Folsom (2012.2) from EPEL6 | * Upstream OpenStack based on Folsom (2012.2) from EPEL6 | ||

| Line 21: | Line 21: | ||

}} | }} | ||

== Definitions == | |||

{| | {| | ||

| Line 36: | Line 36: | ||

|} | |} | ||

= Architecture = | |||

The idea is to have a Management system to be able to quickly deploy OpenStack Controllers or OpenStack Compute nodes. | The idea is to have a Management system to be able to quickly deploy OpenStack Controllers or OpenStack Compute nodes. | ||

== OpenStack Components == | |||

An Openstack Controller Server regroups the following OpenStack modules: | An Openstack Controller Server regroups the following OpenStack modules: | ||

| Line 62: | Line 60: | ||

* Libvirt and dependant packages | * Libvirt and dependant packages | ||

== Environment == | |||

The following environment has been tested to validate all the procedures described in this document: | The following environment has been tested to validate all the procedures described in this document: | ||

| Line 77: | Line 75: | ||

* One NIC per physical machine with simulated interfaces (VLANs or alias) should work but has not been tested.}} | * One NIC per physical machine with simulated interfaces (VLANs or alias) should work but has not been tested.}} | ||

== | == Workflow == | ||

The goal is to achieve the OpenStack deployment in four steps: | The goal is to achieve the OpenStack deployment in four steps: | ||

| Line 85: | Line 83: | ||

# Manage each OpenStack node to be either a Controller or a Compute node | # Manage each OpenStack node to be either a Controller or a Compute node | ||

= RHEL Core: Common definitions = | |||

The Management server itself is based upon the RHEL Core so we define it first. | The Management server itself is based upon the RHEL Core so we define it first. | ||

| Line 99: | Line 97: | ||

IPV6 is not required. Meanwhile for kernel dependencies and performance reasons we recommend to not deactivate the IPV6 module unless you know what you're doing.}} | IPV6 is not required. Meanwhile for kernel dependencies and performance reasons we recommend to not deactivate the IPV6 module unless you know what you're doing.}} | ||

== | == NTP == | ||

The NTP service is required and included during the deployment of OpenStack components. | The NTP service is required and included during the deployment of OpenStack components. | ||

| Line 108: | Line 106: | ||

* Using the same time zone | * Using the same time zone | ||

* On time, less than 5 minutes delay from each others | * On time, with less than 5 minutes delay from each others | ||

== Yum Repositories == | |||

Activate the following repositories: | Activate the following repositories: | ||

* RHEL6 Server Optional RPMS | * RHEL6 Server Optional RPMS | ||

* PuppetLabs | |||

* EPEL6 | * EPEL6 | ||

<pre> | <pre> | ||

rpm -Uvh http://yum.puppetlabs.com/el/6/products/i386/puppetlabs-release-6-7.noarch.rpm | |||

rpm -Uvh http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm | rpm -Uvh http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm | ||

yum-config-manager --enable rhel-6-server-optional-rpms | yum-config-manager --enable rhel-6-server-optional-rpms --enable epel --enable puppetlabs-products --enable puppetlabs-deps | ||

yum clean | yum clean expire-cache | ||

</pre> | </pre> | ||

| Line 128: | Line 128: | ||

</pre> | </pre> | ||

== SELinux== | |||

At the time of writing, SELinux rules have not been fully validated for: | |||

* Foreman using the automated installation | |||

* OpenStack | |||

This is an ongoing work. | |||

{{admon/note|Note| if you plan do to the manual installation of the management server (further down) then you can skip this.}} | {{admon/note|Note| if you plan do to the manual installation of the management server (further down) then you can skip this.}} | ||

So in the meantime, we need to activate SELinux in permissive mode: | |||

<pre> | <pre> | ||

setenforce 0 | setenforce 0 | ||

| Line 145: | Line 146: | ||

<pre> | <pre> | ||

SELINUX = permissive | SELINUX = permissive | ||

SELINUXTYPE=targeted | SELINUXTYPE = targeted | ||

</pre> | </pre> | ||

== FQDN == | |||

Make sure every host can resolve the Fully Qualified Domain Name of the management server is defined in available DNS or alternatively use the /etc/hosts file. | Make sure every host can resolve the Fully Qualified Domain Name of the management server is defined in available DNS or alternatively use the /etc/hosts file. | ||

== Puppet Agent == | |||

The puppet agent must be installed on every host and be configured in order to: | The puppet agent must be installed on every host and be configured in order to: | ||

| Line 206: | Line 207: | ||

</pre> | </pre> | ||

= | = Automated Installation of Management Server = | ||

Let's get started with | Let's get started with the automated deployment of Puppet-Foreman application suite in order to manage our OpenStack infrastructure. | ||

{{admon/note|Note|The manual installation method is described here: | |||

[[How_to_Deploy_Puppet_Foreman_on_RHEL6_manually]]}} | |||

The Automated installation of the Management server provides: | The Automated installation of the Management server provides: | ||

* Puppet Master | * Puppet Master | ||

* | * HTTP service with Apache SSL and Passenger | ||

* Foreman Proxy (Smart-proxy) and Foreman | * Foreman Proxy (Smart-proxy) and Foreman | ||

* No SELinux | * No SELinux | ||

| Line 232: | Line 222: | ||

Before starting, make sure the "RHEL Core: Common definitions" described earlier have been applied. | Before starting, make sure the "RHEL Core: Common definitions" described earlier have been applied. | ||

In case there are several network interfaces on the Management machine, Foreman HTTPS service is to be activated on the desired interface by either: | |||

* Deactivate all interfaces but the desired one. Be careful not to cut yourself out! | |||

or | |||

* After the installation, replace with IP of choice the "<VirtualHost IP:80>" and "<VirtualHost IP:443>" records in the /etc/httpd/conf.d/foreman.conf file | |||

Using Puppet itself onto the Management machine to install the Foreman suite: | |||

<pre> | <pre> | ||

# Get packages | # Get packages | ||

yum install -y | yum install -y git | ||

# Get foreman-installer modules | # Get foreman-installer modules | ||

| Line 244: | Line 237: | ||

# Install | # Install | ||

puppet -v --modulepath=/root/foreman-installer -e "include puppet, puppet::server, passenger, foreman_proxy, foreman" | puppet apply -v --modulepath=/root/foreman-installer -e "include puppet, puppet::server, passenger, foreman_proxy, foreman" | ||

</pre> | </pre> | ||

At this stage, Foreman should be accessible on your Management host on HTTPS: https://host1.example.org. | |||

You will be prompted to sign-on: use default user “admin” with the password “changeme”. | |||

Foreman should be accessible | |||

Note: If you're using a Firewall you must open HTTPS(443) port to access the GUI. | |||

== Optional Database Backend == | |||

Please refer to this page if you'd like to use mysql or postgresql as backend for Puppet and Foreman: | |||

[[How_to_Puppet_Foreman_Mysql_or_Postgresql_on_RHEL6]] | |||

= Foreman Configuration = | |||

For OpenStack deployments, we need Foreman to: | |||

* Setup smart-proxy (Foreman-proxy) | |||

* Define globals variables | |||

* Download Puppet Modules | |||

* Declare hostgroups | |||

Those steps have been scripted using Foreman API. | |||

{{admon/note| | {{admon/note|Important|The script must be run (for now) from the Management server itself, as root}} | ||

Download the script: | |||

<pre> | <pre> | ||

git clone https://github.com/gildub/foremanopenstack-setup /tmp/setup-foreman | |||

cd /tmp/setup-foreman | |||

</pre> | </pre> | ||

Then edit foreman-params.json file to and change the following to needs: | |||

* The host section with your foreman hostname and user/passwd if you have changed them | |||

* The proxy name can be anything you'd like, the host is the same as foreman FQDN name, with ssl and port 8443. | |||

* The globals are your OpenStack values, adjust all passwords, tokens, network ranges and NICs values to you needs. | |||

Notes: Be careful with JSON syntax - It doesn't like a colon at the wrong place! | |||

Lets launch the script to configure Foreman with our parameters: | |||

<pre> | <pre> | ||

./foreman-setup.rb -l logfile all | |||

</pre> | </pre> | ||

Check the log file to confirm there wasn't any error. | |||

[[ | {{admon/note|Note|The above process can also be done manually using Foreman GUI, it's described here: | ||

[[How_to_Deploy_Openstack_Setup_Foreman_on_RHEL6_manually]]}} | |||

= Manage Hosts = | |||

To make a system part of our OpenStack infrastructure we have to: | To make a system part of our OpenStack infrastructure we have to: | ||

| Line 609: | Line 293: | ||

* Assign the host either the openstack-controller or openstack-compute Host Group | * Assign the host either the openstack-controller or openstack-compute Host Group | ||

== Register Host Certificates == | |||

=== Using Autosign === | |||

With autosign option, the hosts can be automatically registered and visible from Foreman by | With autosign option, the hosts can be automatically registered and visible from Foreman by | ||

adding the hostnames to the /etc/puppet/autosign.conf file. | adding the hostnames to the /etc/puppet/autosign.conf file. | ||

=== Signing Certificates === | |||

If you're not using the autosign option then you will have to sign the host certificate, using either: | If you're not using the autosign option then you will have to sign the host certificate, using either: | ||

* Foreman GUI | * Foreman GUI | ||

| Line 637: | Line 321: | ||

<pre>puppetca sign host3.example.org</pre> | <pre>puppetca sign host3.example.org</pre> | ||

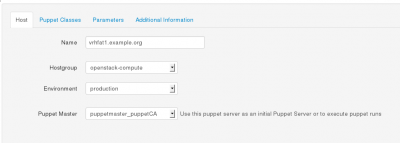

== Assign a Host Group == | |||

Display the hosts using the “Hosts” button at the top Foreman GUI screen. | Display the hosts using the “Hosts” button at the top Foreman GUI screen. | ||

| Line 649: | Line 333: | ||

Save by hitting the “Submit” button. | Save by hitting the “Submit” button. | ||

== Deploy OpenStack Components == | |||

We are done! | We are done! | ||

Revision as of 06:27, 26 April 2013

This Wiki provides the Open Source and Red Hat communities with a guide to deploying OpenStack infrastructures using the Puppet/Foreman system management solution.

We describe how to deploy and provision the management system itself, and how to use it to deploy OpenStack Controller and OpenStack Compute nodes.

Note: This information has been gathered from real OpenStack lab tests using the latest data available at the time of writing.

Introduction

Assumptions

- Upstream OpenStack based on Folsom (2012.2) from EPEL6

- The Operating System is Red Hat Enterprise Linux - RHEL6.4+. All machines (virtual or physical) have been provisioned with a base RHEL6 system and are up to date.

- The system management solution is based on Foreman 1.1 from the Foreman Yum Repo and Puppet 2.6.17 from the Extra Packages for Enterprise Linux 6 (EPEL6).

- Foreman can also provide full system provisioning; however, this is not covered here, at least for now.

- Foreman Smart-proxy runs on the same host as Foreman. Please adjust accordingly if running on a separate host.

Definitions

| Name | Description |
|---|---|
| Host Group | Foreman definition grouping an environment, Puppet classes and variables together, to be inherited by hosts. |
| OpenStack Controller node | Server with all the OpenStack modules needed to manage OpenStack Compute nodes |
| OpenStack Compute node | Server running the OpenStack Nova Compute and Nova Network modules, providing OpenStack cloud instances |
| RHEL Core | Base Operating System installed with standard RHEL packages and the specific configuration required by all systems (or hosts) |

Architecture

The idea is to have a Management system that can quickly deploy OpenStack Controllers or OpenStack Compute nodes.

OpenStack Components

An OpenStack Controller Server groups the following OpenStack modules:

- OpenStack Keystone, the identity server

- OpenStack Glance, the image repository

- OpenStack Nova Scheduler

- OpenStack Horizon, the dashboard

- OpenStack Nova API

- QPID, the AMQP Messaging Broker

- MySQL backend

- An OpenStack Compute node

An OpenStack Compute node consists of the following modules:

- OpenStack Nova Compute

- OpenStack Nova Network

- OpenStack Nova API

- Libvirt and dependent packages

Environment

The following environment has been tested to validate all the procedures described in this document:

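- Management System: physical or virtual machine

- OpenStack controller: physical machine

- OpenStack compute nodes: several physical machines

Note: One NIC per physical machine with simulated interfaces (VLANs or aliases) should work but has not been tested.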

Workflow

The goal is to achieve the OpenStack deployment in four steps:

- Deploy the system management solution Foreman

- Prepare Foreman for OpenStack

- Deploy the RHEL core definition with Puppet agent on participating OpenStack nodes

- Manage each OpenStack node to be either a Controller or a Compute node

RHEL Core: Common definitions

The Management server itself is based on the RHEL Core, so we define it first.

In the rest of this documentation we assume that every system:

- Is using the latest Red Hat Enterprise Linux version 6.x. We have tested with RHEL6.4.

- Is registered and subscribed with a Red Hat account, either RHN Classic or RHSM. We have tested with RHSM.

- Has been updated with the latest packages

- Has been configured with the following definitions

Note: IPv6 is not required. However, for kernel dependency and performance reasons, we recommend not deactivating the IPv6 module unless you know what you're doing.

NTP

The NTP service is required and included during the deployment of OpenStack components.

However, for Puppet to work properly with SSL, all the physical machines must have their clocks in sync.

Make sure all the hardware clocks are:

- Using the same time zone

- On time, within 5 minutes of each other
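Clock skew between two hosts can be checked by comparing their Unix timestamps. The sketch below is a minimal example, assuming SSH access between hosts; `within_skew` is a hypothetical helper name, and its 300-second default simply mirrors the 5-minute limit above.

```shell
# Hypothetical helper: succeed when two Unix timestamps (in seconds)
# differ by less than the allowed skew (default 300 s, i.e. 5 minutes).
within_skew() {
    local_ts=$1
    remote_ts=$2
    max_skew=${3:-300}
    diff=$((local_ts - remote_ts))
    # Take the absolute value of the difference.
    if [ "$diff" -lt 0 ]; then
        diff=$((-diff))
    fi
    [ "$diff" -lt "$max_skew" ]
}
```

For example, from the Management server: `within_skew "$(date +%s)" "$(ssh host3.example.org date +%s)" || echo "clock skew too large"`. The SSH round trip adds a second or so, which is negligible against a 5-minute window.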

Yum Repositories

Activate the following repositories:

- RHEL6 Server Optional RPMS

- PuppetLabs

- EPEL6

rpm -Uvh http://yum.puppetlabs.com/el/6/products/i386/puppetlabs-release-6-7.noarch.rpm
rpm -Uvh http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm
yum-config-manager --enable rhel-6-server-optional-rpms --enable epel --enable puppetlabs-products --enable puppetlabs-deps
yum clean expire-cache
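It can help to confirm that the repositories are actually enabled before going further. The sketch below reads `yum repolist enabled` output on standard input and prints any of the repository ids used above that are missing. `missing_repos` is a hypothetical helper, and it assumes the repository id is the first column of yum's output.

```shell
# Hypothetical helper: print each required repository id that does not
# appear in the `yum repolist enabled` output read from standard input.
missing_repos() {
    # Repository ids enabled by the commands above.
    required="rhel-6-server-optional-rpms epel puppetlabs-products puppetlabs-deps"
    # Keep the first column only; drop yum's "!"/"*" markers and any "/arch" suffix.
    enabled=$(awk '{ id = $1; sub(/^[!*]+/, "", id); sub(/\/.*$/, "", id); print id }')
    for repo in $required; do
        printf '%s\n' "$enabled" | grep -qxF -- "$repo" || echo "$repo"
    done
}
```

Usage: `yum repolist enabled | missing_repos` prints nothing when all four repositories are enabled.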

We need the Augeas utility for manipulating configuration files:

yum -y install augeas

SELinux

At the time of writing, SELinux rules have not been fully validated for:

- Foreman using the automated installation

- OpenStack

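This is ongoing work.

Note: If you plan to do the manual installation of the management server (further down), you can skip this section.

In the meantime, SELinux must be set to permissive mode: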

setenforce 0

And make it persistent in the /etc/selinux/config file (without spaces around the equals signs):

SELINUX=permissive
SELINUXTYPE=targeted
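The persistent change can also be scripted. This is a minimal sketch, assuming GNU sed (for `sed -i`) and that the file is read as plain `KEY=value` lines. `set_selinux_permissive` is a hypothetical helper that rewrites only the `SELINUX=` line and leaves `SELINUXTYPE` alone.

```shell
# Hypothetical helper: force SELINUX=permissive in the SELinux config file,
# leaving SELINUXTYPE and every other line untouched.
set_selinux_permissive() {
    cfg=${1:-/etc/selinux/config}
    # Matches "SELINUX=..." (with or without stray spaces) but not "SELINUXTYPE=...".
    pattern='^[[:space:]]*SELINUX[[:space:]]*='
    if grep -q "$pattern" "$cfg"; then
        sed -i "s/${pattern}.*/SELINUX=permissive/" "$cfg"
    else
        echo 'SELINUX=permissive' >> "$cfg"
    fi
}
```

Run as root together with `setenforce 0`. Afterwards `getenforce` should report `Permissive`.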

FQDN

Make sure every host can resolve the Fully Qualified Domain Name (FQDN) of the management server, either through an available DNS server or, alternatively, through the /etc/hosts file.
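If you use /etc/hosts, the entry should be added only once on each host. The sketch below is a hypothetical helper, `ensure_host_entry`. The address 192.0.2.10 and the alias `puppet` used in the example are placeholders for your own values.

```shell
# Hypothetical helper: add "IP FQDN [alias]" to a hosts file unless some
# non-comment line already lists that FQDN as a name.
ensure_host_entry() {
    ip=$1
    fqdn=$2
    alias_name=$3
    hosts=${4:-/etc/hosts}
    if awk -v name="$fqdn" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$hosts"; then
        return 0
    fi
    printf '%s\t%s%s\n' "$ip" "$fqdn" "${alias_name:+ $alias_name}" >> "$hosts"
}
```

For example, `ensure_host_entry 192.0.2.10 puppet.example.org puppet` on each host, followed by `getent hosts puppet.example.org` to confirm that the name resolves.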

Puppet Agent

The puppet agent must be installed on every host and be configured in order to:

- Point to the Puppet Master, which is our Management server

- Have Puppet plug-ins activated

The following commands make that happen:

PUPPETMASTER="puppet.example.org"
yum install -y puppet
# Set PuppetServer
augtool -s set /files/etc/puppet/puppet.conf/agent/server $PUPPETMASTER
# Puppet Plugins
augtool -s set /files/etc/puppet/puppet.conf/main/pluginsync true

Afterwards, the /etc/puppet/puppet.conf file should look like this:

[main]
    # The Puppet log directory.
    # The default value is '$vardir/log'.
    logdir = /var/log/puppet
    # Where Puppet PID files are kept.
    # The default value is '$vardir/run'.
    rundir = /var/run/puppet
    # Where SSL certificates are kept.
    # The default value is '$confdir/ssl'.
    ssldir = $vardir/ssl
    pluginsync=true
[agent]
    # The file in which puppetd stores a list of the classes
    # associated with the retrieved configuration. Can be loaded in
    # the separate ``puppet`` executable using the ``--loadclasses``
    # option.
    # The default value is '$confdir/classes.txt'.
    classfile = $vardir/classes.txt
    # Where puppetd caches the local configuration. An
    # extension indicating the cache format is added automatically.
    # The default value is '$confdir/localconfig'.
    localconfig = $vardir/localconfig
    server=puppet.example.org
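A small INI lookup is enough to check the result without reading the whole file. The sketch below uses a hypothetical helper, `ini_get`, that prints the value of a key inside a given `[section]`. It only handles the simple `key = value` layout shown above, not every puppet.conf feature.

```shell
# Hypothetical helper: print the value of KEY inside [SECTION] of an INI-style
# file such as /etc/puppet/puppet.conf. Usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="$2" -v key="$3" '
        # A "[name]" header starts a new section.
        /^[ \t]*\[/ {
            current = $0
            sub(/^[ \t]*\[/, "", current)
            current = substr(current, 1, index(current, "]") - 1)
            next
        }
        # Ignore comments and blank lines.
        /^[ \t]*#/ { next }
        /^[ \t]*$/ { next }
        current == section && index($0, "=") > 0 {
            k = substr($0, 1, index($0, "=") - 1)
            v = substr($0, index($0, "=") + 1)
            # Trim surrounding blanks from key and value.
            sub(/^[ \t]+/, "", k); sub(/[ \t]+$/, "", k)
            sub(/^[ \t]+/, "", v); sub(/[ \t]+$/, "", v)
            if (k == key) print v
        }' "$1"
}
```

For example, `ini_get /etc/puppet/puppet.conf agent server` should print `puppet.example.org`, and `ini_get /etc/puppet/puppet.conf main pluginsync` should print `true`.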

Automated Installation of Management Server

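Let's get started with the automated deployment of the Puppet-Foreman application suite in order to manage our OpenStack infrastructure.

Note: The manual installation method is described here: How_to_Deploy_Puppet_Foreman_on_RHEL6_manually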

The Automated installation of the Management server provides:

- Puppet Master

- HTTP service with Apache SSL and Passenger

- Foreman Proxy (Smart-proxy) and Foreman

- No SELinux

Before starting, make sure the "RHEL Core: Common definitions" described earlier have been applied.

If the Management machine has several network interfaces, activate the Foreman HTTPS service on the desired interface by either:

- Deactivating all interfaces but the desired one. Be careful not to lock yourself out!

or

- After the installation, replacing the address in the "<VirtualHost IP:80>" and "<VirtualHost IP:443>" records of the /etc/httpd/conf.d/foreman.conf file with the IP of your choice, then restarting the httpd service
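The second option can be scripted with `sed` once the installation has created the file. This is a minimal sketch, assuming GNU sed and records of the form `<VirtualHost ADDRESS:80>` and `<VirtualHost ADDRESS:443>` (for example with `*` as the address). `bind_vhosts` is a hypothetical helper, and 192.0.2.10 is a placeholder address.

```shell
# Hypothetical helper: bind the port 80 and 443 VirtualHost records of
# foreman.conf to one IP address, keeping a .bak copy of the original file.
bind_vhosts() {
    ip=$1
    conf=${2:-/etc/httpd/conf.d/foreman.conf}
    cp -p "$conf" "$conf.bak" || return 1
    # -r enables extended regexps in GNU sed; \1 keeps the original port.
    sed -i -r "s/<VirtualHost [^:>]+:(80|443)>/<VirtualHost ${ip}:\1>/" "$conf"
}
```

Usage: `bind_vhosts 192.0.2.10 && service httpd restart`. The `.bak` copy makes it easy to roll back if Apache refuses to start.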

Use Puppet itself on the Management machine to install the Foreman suite:

# Get packages
yum install -y git
# Get foreman-installer modules
git clone --recursive https://github.com/theforeman/foreman-installer.git /root/foreman-installer
# Install
puppet apply -v --modulepath=/root/foreman-installer -e "include puppet, puppet::server, passenger, foreman_proxy, foreman"

At this stage, Foreman should be accessible over HTTPS on your Management host, for example https://host1.example.org. You will be prompted to sign in: use the default user “admin” with the password “changeme”.

Note: If you're using a firewall, you must open the HTTPS port (443) to access the GUI.

Optional Database Backend

Please refer to this page if you'd like to use MySQL or PostgreSQL as the backend for Puppet and Foreman:

How_to_Puppet_Foreman_Mysql_or_Postgresql_on_RHEL6

Foreman Configuration

For OpenStack deployments, we need Foreman to:

- Set up the smart-proxy (Foreman-proxy)

- Define global variables

- Download Puppet modules

- Declare Host Groups

These steps have been scripted using the Foreman API.

Important: For now, the script must be run from the Management server itself, as root.

Download the script:

git clone https://github.com/gildub/foremanopenstack-setup /tmp/setup-foreman
cd /tmp/setup-foreman

Then edit the foreman-params.json file and change the following to suit your needs:

- The host section: your Foreman hostname, and the user/password if you have changed them

- The proxy: its name can be anything you like; its host is the Foreman FQDN, with SSL and port 8443

- The globals: these are your OpenStack values; adjust all passwords, tokens, network ranges and NIC values to your needs

Note: Be careful with JSON syntax; a colon in the wrong place will break the file!
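Because a single misplaced character breaks the file, it is worth validating it before running the script. This sketch assumes a Python interpreter is installed. It relies on Python's standard `json.tool` module, which exits non-zero on a syntax error. `check_json` is a hypothetical helper.

```shell
# Hypothetical helper: succeed only if the file parses as JSON.
# Python's json.tool module exits non-zero and reports where parsing failed.
check_json() {
    py=$(command -v python3 || command -v python) || {
        echo "check_json: no Python interpreter found" >&2
        return 2
    }
    "$py" -m json.tool "$1" > /dev/null
}
```

Usage: `check_json foreman-params.json && ./foreman-setup.rb -l logfile all` only launches the setup when the parameters file is well-formed.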

Let's launch the script to configure Foreman with our parameters:

./foreman-setup.rb -l logfile all

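Check the log file to confirm there were no errors.

Note: The above process can also be done manually using the Foreman GUI. It is described here: How_to_Deploy_Openstack_Setup_Foreman_on_RHEL6_manually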

Manage Hosts

To make a system part of our OpenStack infrastructure we have to:

- Make sure the host follows the common core definitions (see the “RHEL Core: Common definitions” section above)

- Have the host's certificate signed so it's registered with the Management server

- Assign the host to either the openstack-controller or the openstack-compute Host Group

Register Host Certificates

Using Autosign

With the autosign option, hosts can be automatically registered and made visible in Foreman by adding their hostnames to the /etc/puppet/autosign.conf file.
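The autosign file holds one certificate name per line, so adding hosts is a simple append. The sketch below avoids duplicate entries. `add_autosign` and the `AUTOSIGN_CONF` path override are hypothetical names used for illustration. Puppet also accepts domain globs such as `*.example.org` in this file. Keep in mind that any host presenting a matching name is signed without review.

```shell
# Hypothetical helper: append certificate names to Puppet's autosign.conf,
# skipping names that are already listed. AUTOSIGN_CONF overrides the path.
add_autosign() {
    conf=${AUTOSIGN_CONF:-/etc/puppet/autosign.conf}
    touch "$conf" || return 1
    for name in "$@"; do
        grep -qxF -- "$name" "$conf" || echo "$name" >> "$conf"
    done
}
```

Usage, on the Puppet Master as root: `add_autosign host2.example.org host3.example.org`.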

Signing Certificates

If you're not using the autosign option, you will have to sign the host certificate, using either:

- Foreman GUI

Open the Smart Proxies window from the menu "More -> Configuration -> Smart Proxies", and select "Certificates" from the drop-down button of the smart-proxy you created.

From there you can manage all the host certificates and get them signed.

- The Command Line Interface

Assuming the Puppet agent (puppetd) is running on the host, its certificate request will have been sent to the Puppet Master, where it waits to be signed. From the Puppet Master host, use the “puppetca” tool with the “list” command to see the waiting certificates, for example:

# puppetca list
"host3.example.org" (84:AE:80:D2:8C:F5:15:76:0A:1A:4C:19:A9:B6:C1:11)

To sign a certificate, use the “sign” command and provide the hostname, for example:

puppetca sign host3.example.org
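When several hosts are waiting, their names can be extracted from the listing. This sketch assumes the quoted-name format shown in the example output above. `pending_certs` is a hypothetical helper. Review the names before signing, because signing is what grants a host access to the Puppet Master.

```shell
# Hypothetical helper: read `puppetca list` output on standard input and
# print the certificate name that appears between the leading double quotes.
pending_certs() {
    sed -n 's/^[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

For the listing above, `puppetca list | pending_certs` prints `host3.example.org`. After checking the names, pass each one to `puppetca sign`.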

Assign a Host Group

Display the hosts using the “Hosts” button at the top of the Foreman GUI screen.

Then select the corresponding “Edit Host” drop-down button on the right side of the targeted host.

Assign the right environment and attach the appropriate Host Group to that host in order to make it a Controller or a Compute node.

Save by hitting the “Submit” button.

Deploy OpenStack Components

We are done!

The OpenStack components will be installed when the Puppet agent synchronises with the Management server. In effect, the classes are applied when the agent retrieves its catalog from the Master and runs it.

You can also trigger the agent manually so that it checks in with the Puppet Master. To do so, first stop the agent service on the targeted node (for example, the controller node):

service puppet stop

Then run it manually in the foreground:

puppet agent --verbose --no-daemonize
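For a scriptable one-off check, Puppet's `--detailed-exitcodes` option, used with `--onetime`, reports the result through the exit status. 0 means no changes and 2 means changes were applied. 4 means failures, and 6 means there were both changes and failures. The sketch below is a hypothetical helper, `describe_exitcode`, that turns that status into a message.

```shell
# Hypothetical helper: translate a `puppet agent --detailed-exitcodes` exit
# status into a message; returns non-zero when the run reported failures.
describe_exitcode() {
    case "$1" in
        0) echo "no changes"; return 0 ;;
        2) echo "changes applied"; return 0 ;;
        4) echo "failures"; return 1 ;;
        6) echo "changes applied, with failures"; return 1 ;;
        *) echo "unexpected exit status $1"; return 1 ;;
    esac
}
```

Usage: `puppet agent --onetime --no-daemonize --verbose --detailed-exitcodes; describe_exitcode $?`. Unlike the foreground run above, this performs a single run and then exits.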