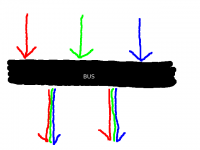

[[File:FMG-bus.png|200px|How audio busses work.]]

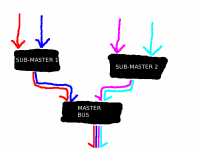

[[File:FMG-master_sub_bus.png|200px|The relationship between the master bus and sub-master busses.]]

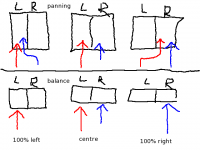

[[File:FMG-Balance_and_Panning.png|200px|left|The difference between adjusting panning and adjusting balance.]]

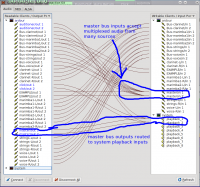

[[File:FMG-routing_and_multiplexing.png|200px|left|Illustration of routing and multiplexing in the "Connections" window of the QjackCtl interface.]]

Revision as of 23:39, 26 July 2010

These terms are used in many different audio contexts. Understanding them is important to knowing how to operate audio equipment in general, whether computer-based or not.

Address: User:Crantila/FSC/Audio_Vocabulary

MIDI Sequencer

A sequencer is a device or software application which produces signals that a synthesizer turns into sound. Sequencers also allow the editing and arrangement of these signals into a musical composition. The two MIDI-focussed DAWs in the Musicians' Guide, Qtractor and Rosegarden, are well-suited to serve as MIDI sequencers. Furthermore, all three DAWs use MIDI instructions to perform automation.
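As a rough illustration of the kind of signals a sequencer arranges, the following Python sketch builds a short list of MIDI note events. The event layout (tick, status byte, note number, velocity), the helper names `make_note` and `arrange`, and the choice of 480 ticks per note are assumptions made for this example; only the note-on (0x90) and note-off (0x80) status bytes for channel 1 come from the MIDI standard. No sound is produced here: a synthesizer would still have to turn these events into audio.

```python
# Hypothetical event layout: (tick, status byte, note number, velocity).
NOTE_ON = 0x90   # MIDI "note on" status byte for channel 1
NOTE_OFF = 0x80  # MIDI "note off" status byte for channel 1


def make_note(start_tick, length_ticks, note, velocity=100):
    """Return the note-on/note-off event pair for a single note."""
    return [
        (start_tick, NOTE_ON, note, velocity),
        (start_tick + length_ticks, NOTE_OFF, note, 0),
    ]


def arrange(notes):
    """Merge per-note event pairs into one list ordered by tick."""
    events = [event for note in notes for event in note]
    # sorted() is stable, so a note-off stays ahead of a note-on at the same tick.
    return sorted(events, key=lambda event: event[0])


# Three notes of a C major arpeggio (60 is middle C), each 480 ticks long.
sequence = arrange([
    make_note(0, 480, 60),
    make_note(480, 480, 64),
    make_note(960, 480, 67),
])

for tick, status, note, velocity in sequence:
    print(tick, hex(status), note, velocity)
```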

Busses, Master Bus, and Sub-master Bus

An audio bus is used to send an audio signal from one place to another. Just as a highway collects all sorts of automobiles going in the same general direction, an audio bus collects all sorts of signals going in the same direction (usually into or out of the DAW, but sometimes between parts of the DAW). Unlike automobiles on a highway, however, once audio signals enter a bus, they cannot be separated again.

All audio being routed out of a program usually passes through the master bus. The master bus collects and consolidates all audio and MIDI tracks, allowing for final level adjustments and for simpler mastering. The primary purpose of the master bus is to mix all of the tracks into two channels.

A sub-master bus collects audio signals before they are sent to the master bus. Using a sub-master bus is optional, and it allows you to subject more than one track to the same adjustment, without affecting all the tracks. A sub-master bus is often simply referred to as a bus.
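The relationship between tracks, a sub-master bus, and the master bus can be sketched in a few lines of Python. This is a simplified model, not how any particular DAW is implemented: the signals are short lists of mono samples (a real master bus would normally carry two channels), and the track names, the `mix` and `apply_gain` helpers, and the gain value are all assumptions made for the example.

```python
def mix(signals):
    """Sum equal-length lists of samples into one signal, as a bus does."""
    return [sum(frame) for frame in zip(*signals)]


def apply_gain(signal, gain):
    """Scale every sample in a signal by the same factor."""
    return [sample * gain for sample in signal]


# Hypothetical mono tracks, four samples each.
kick = [0.5, 0.0, 0.5, 0.0]
snare = [0.0, 0.25, 0.0, 0.25]
vocals = [0.25, 0.25, 0.25, 0.25]

# The sub-master bus collects both drum tracks, so a single adjustment
# (here, halving the level) affects the drums without touching the vocals.
drum_bus = apply_gain(mix([kick, snare]), 0.5)

# The master bus collects everything that leaves the program.
master_bus = mix([drum_bus, vocals])
print(master_bus)  # [0.5, 0.375, 0.5, 0.375]
```

Once the drum tracks have been summed into `drum_bus`, the kick and snare can no longer be recovered separately from it, which is the sense in which audio on a bus cannot be separated.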

Level (Volume/Loudness)

The perceived volume or loudness of a portion of audio is a complex phenomenon, involving many different factors that are too numerous and enigmatic to explain anywhere, let alone here. One widely accepted method of assessing loudness is to measure the sound pressure level (SPL), which is expressed in bels or decibels (B or dB). In audio production communities, this is normally referred to simply as "level." The level of an audio signal is one way of measuring the signal's perceived loudness, and it is stored along with the audio signal.

Much controversy exists over how to effectively monitor and adjust levels, partly because it is an aesthetic practice and therefore heavily subjective. Commonly, the average level is designed to be -6dB on the meter, and the maximum level 0dB, but this is not the case with all metering practices.
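For readers who want to relate meter readings to signal amplitudes, the following Python sketch converts between a linear amplitude and a level in decibels using the standard formula of 20 times the base-10 logarithm of an amplitude ratio. It assumes that 0 dB on the meter corresponds to a reference amplitude of 1.0, which is a common convention for digital meters but is not stated in this guide; the function names are chosen for this example.

```python
import math


def amplitude_to_db(amplitude, reference=1.0):
    """Convert a positive linear amplitude to decibels relative to `reference`."""
    if amplitude <= 0:
        raise ValueError("amplitude must be positive")
    return 20.0 * math.log10(amplitude / reference)


def db_to_amplitude(db, reference=1.0):
    """Convert a level in decibels back to a linear amplitude."""
    return reference * 10.0 ** (db / 20.0)


print(amplitude_to_db(1.0))             # 0.0
print(round(amplitude_to_db(0.5), 2))   # -6.02
print(round(db_to_amplitude(-6.0), 3))  # 0.501
```

Under that assumption, a reading of -6 dB corresponds to roughly half of the maximum amplitude.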

Throughout the Fedora Musicians' Guide, this term is often called "volume level," to avoid confusion with other levels, and to be clear that this is not referring to perceived volume or loudness.

For more information, refer to these web pages:

- "Level Practices" (the type of meter described here is available in the "jkmeter" package from Planet CCRMA at Home).

- "K-system"

- "Headroom"

- "Equal-loudness contour"

- "Sound level meter"

- "Listener fatigue"

- "Dynamic range compression"

- "Alignment level"

Panning and Balance

Panning allows you to adjust the portion of a channel's signal that is sent through each output channel. Assuming a stereophonic (two-channel) setup, the two channels will represent the "left" and the "right" speakers. The DAW will have two channels of audio recorded, and a default setup might send all of the "left" recorded channel to the "left" output channel, and all of the "right" recorded channel to the "right" output channel. Panning allows you to adjust this, sending some of the left recorded channel's volume to the right output channel, for example. It's important to recognize that this relies on each recorded channel having a constant total output level, which is divided between the two output channels.

An audio engineer might initially set the left recorded channel to "full left" panning, which sends 100% of the output level to the left output channel, and 0% to the right output channel. If they found this setup too tiring for the listener, they might adjust the left recorded channel to send 80% of the signal to the left output channel and 20% of the signal to the right output channel. If they wanted to make the left recorded channel sound like it's in front of the listener, they could set it to "centre" panning, with 50% of the output level being sent to each output channel.
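The percentages in this example can be expressed as a small Python sketch. It implements only the simple linear division described here, in which a constant total level is split between the two outputs; many real mixers use other pan laws, so treat this as an illustration rather than a description of any particular DAW. The function name `pan` and the `right_share` parameter are assumptions made for the example.

```python
def pan(sample, right_share):
    """Split one recorded channel's sample between (left, right) outputs.

    right_share is the fraction sent to the right output:
    0.0 is "full left", 0.5 is "centre", and 1.0 is "full right".
    """
    if not 0.0 <= right_share <= 1.0:
        raise ValueError("right_share must be between 0.0 and 1.0")
    return (sample * (1.0 - right_share), sample * right_share)


print(pan(1.0, 0.0))  # full left: (1.0, 0.0)
print(pan(1.0, 0.5))  # centre: (0.5, 0.5)

left, right = pan(1.0, 0.2)  # the "80% left, 20% right" setting
print(round(left, 2), round(right, 2))  # 0.8 0.2
```

In every case the two output values add up to the original sample, which is the "constant total output level" that panning relies on.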

Balance seems to have a similar effect, but it is not the same as panning. The two terms are sometimes confused on audio equipment and in popular usage. Adjusting the balance allows you to change the volume of the output channels, without redirecting the recorded signal. The default setting for balance should be at "centre," meaning 0% deviation to left or right. As the dial is adjusted towards the "full left" setting, the volume of the left recorded channel and the left output channel remain unchanged, but the volume level of the right output channel is decreased. As the dial is adjusted towards the "full right" setting, the volume of the right recorded channel and the right output channel remain unchanged, but the volume level of the left output channel is decreased. If the dial is adjusted to "20% left," the audio equipment would reduce the volume level of the right channel by 20%, increasing the perceived loudness of the left channel by approximately 20%.
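For comparison with panning, here is a minimal Python sketch of a balance control as it is described in this section: moving the dial towards one side leaves that side unchanged and turns the opposite output down, without redirecting any signal. The function name, the -1.0 to 1.0 range for the dial, and the linear gain reduction are assumptions made for the example.

```python
def balance(left, right, position):
    """Apply a balance setting to one pair of (left, right) output samples.

    position runs from -1.0 ("full left") through 0.0 ("centre")
    to 1.0 ("full right").
    """
    if not -1.0 <= position <= 1.0:
        raise ValueError("position must be between -1.0 and 1.0")
    left_gain = 1.0 - max(0.0, position)    # reduced only when moving right
    right_gain = 1.0 - max(0.0, -position)  # reduced only when moving left
    return (left * left_gain, right * right_gain)


print(balance(1.0, 1.0, 0.0))   # centre: (1.0, 1.0)
print(balance(1.0, 1.0, -1.0))  # full left: (1.0, 0.0)

left, right = balance(1.0, 1.0, -0.2)  # "20% left"
print(left, round(right, 2))  # 1.0 0.8
```

Unlike the panning sketch, nothing from the left channel ever reaches the right output here; the two channels are only made quieter or left alone.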

The balance is not generally adjusted until final play-back. The effect is intended to be used to compensate for poorly set-up listening environments, where the speakers are not equal distances from the listener. If the left speaker is closer to the listener than the right speaker, for example, the listener could adjust the balance to the right, so that the volume level of the left speaker would be lower. When the listener does this, they allow an equal perceived loudness to reach their ears from both speakers. For many reasons, this solution is not ideal: it is better to have properly set-up speakers, but sometimes that is impossible or impractical.

Time, Timeline and Time-Shifting

There are many ways to measure musical time. The four most popular scales for digital audio are:

- Bars and Beats: Usually used for MIDI work, and called "BBT," meaning "Bars, Beats, and Ticks." A tick is a partial beat.

- Minutes and Seconds: Usually used for audio work.

- SMPTE Timecode: Invented for high-precision coordination of audio and video, but can be used with audio alone.

- Samples: Relating directly to the format of the underlying audio file, a sample is the shortest possible length of time in an audio file. See this section for more information on samples.

Most audio software, particularly Digital Audio Workstations (DAWs), allows the user to choose which scale they prefer. DAWs use a timeline to display the progression of time in a session or file, allowing the user to do something called time-shifting, which is adjusting the point in the timeline at which a region starts to be played.

Time is usually represented horizontally, with the leftmost point being the beginning of the session (zero, regardless of the unit of measurement), and the rightmost point being some distance after the end of the session.
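The time scales and the idea of time-shifting can be illustrated with a short Python sketch that converts a position measured in samples into minutes and seconds and into bars, beats, and ticks. The sample rate, tempo, time signature, and number of ticks per beat are all assumed values chosen for the example, as are the function names. The sketch also assumes that bars and beats are counted from 1, as many sequencers display them, while ticks are counted from 0.

```python
SAMPLE_RATE = 48000    # samples per second (assumed)
TEMPO_BPM = 120        # beats per minute (assumed)
BEATS_PER_BAR = 4      # assumed 4/4 time
TICKS_PER_BEAT = 960   # assumed tick resolution


def samples_to_min_sec(samples):
    """Convert a sample position to (minutes, seconds)."""
    seconds = samples / SAMPLE_RATE
    minutes = int(seconds // 60)
    return minutes, seconds - minutes * 60


def samples_to_bbt(samples):
    """Convert a sample position to (bar, beat, tick)."""
    beats = samples / SAMPLE_RATE * TEMPO_BPM / 60
    total_ticks = round(beats * TICKS_PER_BEAT)
    whole_beats, ticks = divmod(total_ticks, TICKS_PER_BEAT)
    bars, beat = divmod(whole_beats, BEATS_PER_BAR)
    return bars + 1, beat + 1, ticks


def time_shift(region_start, offset):
    """Move a region's start point (in samples), never before the session start."""
    return max(0, region_start + offset)


print(samples_to_min_sec(4_320_000))  # (1, 30.0)
print(samples_to_bbt(4_320_000))      # (46, 1, 0)
print(samples_to_bbt(36_000))         # (1, 2, 480)
print(time_shift(48_000, 24_000))     # 72000
print(time_shift(48_000, -96_000))    # 0
```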

Synchronization

Synchronization is exactly what it sounds like - synchronizing the operation of multiple tools. Most often this is used to synchronize movement of the transport, and to control automation across applications and devices. This sort of synchronization is typically achieved with MIDI channels that are not used directly for synthesis.

Routing and Multiplexing

Routing audio is transmitting a signal from one place to another - between applications, between parts of applications, or between devices. On GNU/Linux systems, audio routing in audio creation applications is normally achieved with the JACK Audio Connection Kit. JACK-aware applications (and PulseAudio ones, if so configured) provide the JACK server with different inputs and outputs depending on the current application's configuration. Most applications connect themselves in a default arrangement, but they can always be re-configured with the QjackCtl graphical interface, and often within other programs. This allows for maximum flexibility and creative solutions to otherwise-complex problems: you can easily re-route the output of a program like FluidSynth so that it is provided to Ardour, for recording.

Multiplexing is a related concept, which allows the connection of multiple inputs and outputs to a single connection. This may not seem like an important or difficult thing to do, but remember that digital, computer-based audio evolved from analogue audio, where a special "multiplexer" device is required to connect multiple devices to one connection.
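The following Python sketch models routing and multiplexing as a simple table of connections between named ports. It is a toy model written for this explanation and does not use the JACK API; the `Patchbay` class and the port names such as `synth:left` and `recorder:in_1` are hypothetical. Several outputs connected to one input are summed, which is the way this sketch represents multiplexing.

```python
from collections import defaultdict


class Patchbay:
    """A toy connection graph between output ports and input ports."""

    def __init__(self):
        # Maps each input port to the set of output ports connected to it.
        self.connections = defaultdict(set)

    def connect(self, output_port, input_port):
        self.connections[input_port].add(output_port)

    def disconnect(self, output_port, input_port):
        self.connections[input_port].discard(output_port)

    def deliver(self, outputs):
        """Given {output port: sample}, return {input port: summed sample}."""
        return {
            input_port: sum(outputs.get(port, 0.0) for port in sources)
            for input_port, sources in self.connections.items()
        }


patchbay = Patchbay()
# Route a synthesizer's output to a recorder, and also to a monitor.
patchbay.connect("synth:left", "recorder:in_1")
patchbay.connect("synth:left", "monitor:in_left")
# Connect a second source to the same recorder input.
patchbay.connect("mic:capture", "recorder:in_1")

delivered = patchbay.deliver({"synth:left": 0.25, "mic:capture": 0.5})
print(delivered["recorder:in_1"])    # 0.75
print(delivered["monitor:in_left"])  # 0.25
```

Re-routing in this model is just a matter of calling `disconnect` and `connect` again, which mirrors dragging connections around in a graphical patchbay.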

Multichannel Audio

An audio channel is a single path for delivering audio data. Multichannel audio is any audio which uses more than one channel simultaneously, allowing the storage and transmission of more audio data than single-channel audio.

Audio was originally recorded with only one channel, producing "monophonic," or "mono" recordings. Beginning in the 1950s, stereophonic recordings, with two independent channels, gradually began replacing monophonic recordings. Since humans have two independent ears, it makes a certain amount of sense to record and reproduce audio with two independent channels, involving two speakers. Most sound recordings available today are stereophonic, and people have found this mostly satisfying.

There is a growing trend, however, towards five- and seven-channel audio, driven primarily by "surround-sound" movies, and not widely available for music. Two transmission formats do exist for music, DVD Audio (DVD-A) and Super Audio CD (SACD). The development of these formats, and the devices to use them, is held back by the proliferation of headphones with personal MP3 players, a general lack of desire for improvement in audio quality amongst consumers, and the copy-protection measures put in place by record labels. The result is that, while some consumers are willing to pay moderately higher prices for these formats, there is a poor selection of recordings available. Even if somebody were to buy a DVD-A or SACD-capable player, they would need to replace the rest of their audio equipment with models that support proprietary copy-protection software; otherwise, the player is often forbidden from outputting audio signals at a higher quality than is available from a conventional Audio CD. None of these factors, unfortunately, seems about to change in the near future.